Deep neural networks can be vulnerable to adversarial attacks delivered through artificially manipulated images, which cause models to return highly inaccurate results with a high degree of confidence. While these attacks typically require the attacker to have some control over the model’s input, there is a much easier type of adversarial attack that is rarely discussed: adversaries can use “natural” adversarial images, which are unmodified, real-world images that similarly cause a model to fail.

Researchers at Scale AI created a dataset of natural adversarial objects (NAOs) to examine the performance of state-of-the-art object detection models on difficult-to-classify images. Our NAO dataset includes 7,934 images and 9,943 objects, all of which are unmodified and naturally occurring in the real world. We then used this dataset to assess the robustness of cutting-edge detection methods and to examine why models underperformed on certain NAOs. Here’s how we created our dataset and the vulnerabilities that we subsequently uncovered within current state-of-the-art detection models. By developing an NAO dataset aimed at evaluating your own target dataset or model, your researchers and engineers can build deep learning solutions that are more robust and less vulnerable to NAO attacks.

An NAO Dataset Can Provide a Difficult Test of Robustness for Detection Models

Our NAO dataset is designed to test the performance of object detection models on difficult-to-classify, real-world data, focusing on the evaluation of models trained on Microsoft COCO: Common Objects in Context (MSCOCO), a popular dataset for object detection models. The NAO dataset contains edge cases, which include difficult-to-classify objects that are in MSCOCO’s data labels, and out-of-distribution examples, which include objects that aren't included in MSCOCO’s labels. With this dataset, we explored the overall mean average precision (mAP) of MSCOCO-trained models on the MSCOCO validation and test-dev sets as well as on our NAO dataset. The models performed significantly worse when predicting objects in our dataset.
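For readers less familiar with the metric, here is a minimal sketch of how average precision can be computed for one class and then averaged into mAP. It is a simplified illustration, not the official MSCOCO evaluation (which also averages over several IoU thresholds and uses a fixed recall grid). The function names and the input format, a list of `(score, is_true_positive)` pairs, are assumptions made for this example.

```python
def average_precision(scored_hits, num_ground_truth):
    """Area under the precision-recall curve for one class.

    scored_hits: list of (confidence, is_true_positive) pairs, one per detection.
    num_ground_truth: number of ground-truth objects of this class.
    """
    if num_ground_truth == 0:
        return 0.0
    # Rank detections from most to least confident.
    ranked = sorted(scored_hits, key=lambda hit: hit[0], reverse=True)
    true_positives = 0
    precisions, recalls = [], []
    for rank, (_, is_hit) in enumerate(ranked, start=1):
        true_positives += int(is_hit)
        precisions.append(true_positives / rank)
        recalls.append(true_positives / num_ground_truth)
    # Make precision non-increasing as recall grows (all-point interpolation).
    for i in range(len(precisions) - 2, -1, -1):
        precisions[i] = max(precisions[i], precisions[i + 1])
    # Sum the rectangles under the interpolated curve.
    ap, previous_recall = 0.0, 0.0
    for precision, recall in zip(precisions, recalls):
        ap += (recall - previous_recall) * precision
        previous_recall = recall
    return ap


def mean_average_precision(per_class):
    """per_class maps a class name to (scored_hits, num_ground_truth)."""
    aps = [average_precision(hits, n) for hits, n in per_class.values()]
    return sum(aps) / len(aps) if aps else 0.0
```

Running the same function over detections from the MSCOCO validation set and from an NAO-style dataset gives two numbers that can be compared directly, which is the kind of comparison described above.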

How We Built Our Dataset

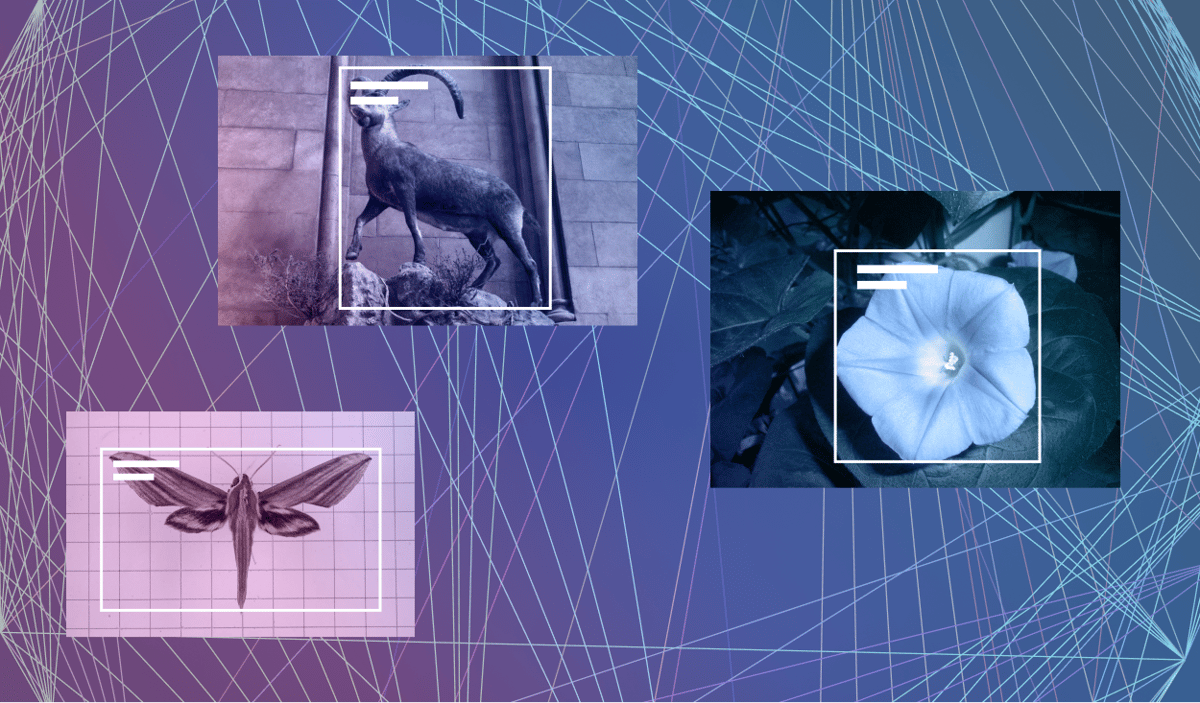

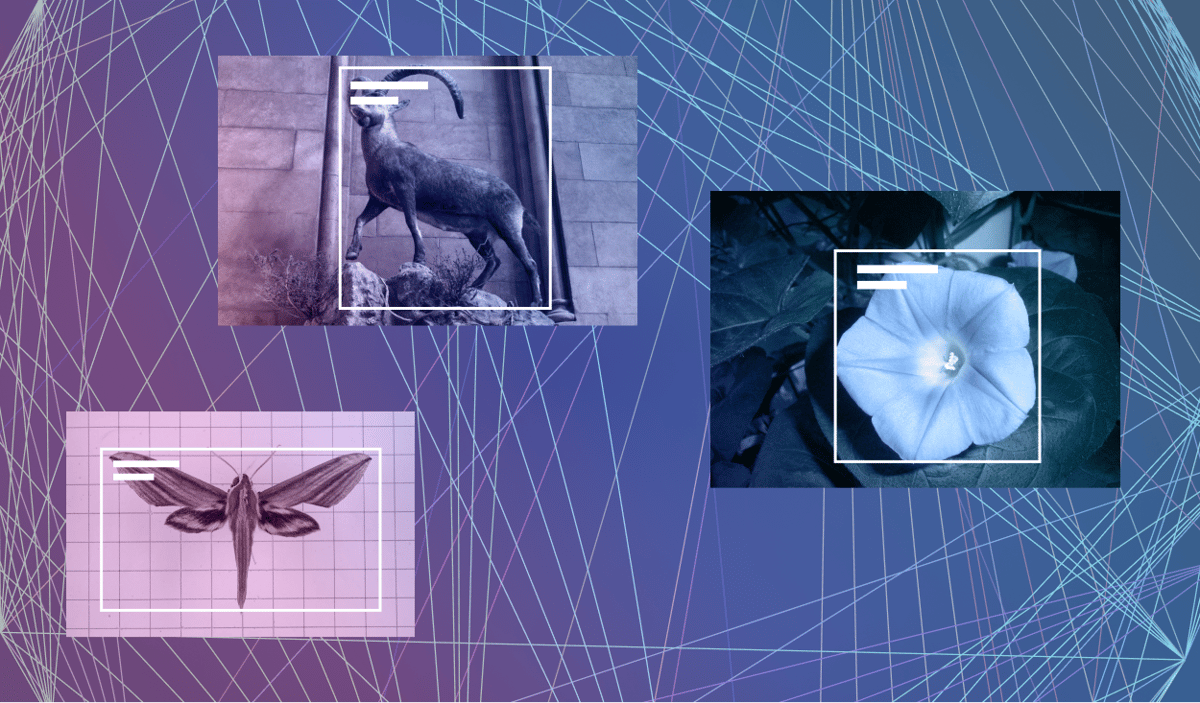

Although our dataset was designed to evaluate models trained on MSCOCO, researchers can follow a similar approach to create an NAO dataset for other target datasets or pretrained models. We created our dataset with images sourced from OpenImages, a large, annotated image dataset that contains 1.9 million images and 15.8 million bounding boxes across 600 object classes. To determine candidate objects that should be classified as naturally adversarial, we used a model trained on MSCOCO to predict object bounding boxes for each image and then identified 43,860 images that contained at least one object that was misclassified with a high degree of confidence. After identifying these images, annotators confirmed that the ground truth labels of these objects were correct and filtered out any ambiguous object that would be difficult for a human to identify.

After this process was completed, annotators went through the remaining images and labeled and placed bounding boxes around all objects included in the MSCOCO data labels. This produced a final dataset that consisted of 7,934 images and 9,943 objects.
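The model-based filtering step described above can be sketched roughly as follows. This is an illustration under stated assumptions, not the exact pipeline we ran: the `Box` type, the function names, and the `min_score` and `min_iou` thresholds are all hypothetical, and the sketch assumes predicted and ground-truth labels have already been mapped to a shared vocabulary.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Box:
    label: str
    x1: float
    y1: float
    x2: float
    y2: float
    score: float = 1.0  # ground-truth boxes keep the default score


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a.x2, b.x2) - max(a.x1, b.x1))
    inter_h = max(0.0, min(a.y2, b.y2) - max(a.y1, b.y1))
    inter = inter_w * inter_h
    union = (a.x2 - a.x1) * (a.y2 - a.y1) + (b.x2 - b.x1) * (b.y2 - b.y1) - inter
    return inter / union if union > 0 else 0.0


def confident_misclassifications(predictions, ground_truth,
                                 min_score=0.8, min_iou=0.5):
    """Return (prediction, ground_truth) pairs where a confident prediction
    overlaps a ground-truth box but carries a different label.

    Both thresholds are illustrative values chosen for this sketch.
    """
    candidates = []
    for pred in predictions:
        if pred.score < min_score:
            continue  # only high-confidence predictions are of interest
        for gt in ground_truth:
            if pred.label != gt.label and iou(pred, gt) >= min_iou:
                candidates.append((pred, gt))
    return candidates
```

An image would be kept as a candidate whenever this function returns at least one pair. The candidates still need human review, as described above, to confirm the ground truth and to discard ambiguous objects.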

The MSCOCO Dataset Has Blind Spots for Predicting NAOs

After building our dataset, we began to explore the factors that contributed to objects being classified as naturally adversarial. We found that our NAOs reflected blind spots in the MSCOCO dataset.

The MSCOCO dataset includes images related to 80 object categories, including common objects such as horses, clocks, and cars. Because it includes only images related to these 80 categories, however, it provides a biased sampling of all captured photos. MSCOCO does not contain a fish category, for example, so it contains a lower frequency of photos taken underwater compared to all captured photos.

We examined this sampling bias by comparing the distribution of features across three datasets: an OpenImages train dataset, the MSCOCO train dataset, and the NAO dataset. When we visualized these distributions, we found that numerous regions had a lower frequency of samples in MSCOCO than in OpenImages.

Conversely, we found that the regions with a low frequency in MSCOCO tended to have a high frequency in the NAO dataset. This suggests that NAOs tended to take advantage of these low-sampled regions.
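One simple way to make this kind of comparison concrete is to bin each dataset's features into a grid and compare the per-cell frequencies. The sketch below is only an illustration: it assumes each image has already been reduced to a 2-D feature point with coordinates in [0, 1), and the function names, the `bins` grid size, and the `ratio` cutoff are all hypothetical choices rather than details from our analysis.

```python
from collections import Counter


def cell_frequencies(points, bins=10):
    """Fraction of points falling in each grid cell.

    Assumes every point is an (x, y) pair with coordinates in [0, 1).
    """
    counts = Counter(
        (min(int(x * bins), bins - 1), min(int(y * bins), bins - 1))
        for x, y in points
    )
    total = sum(counts.values())
    return {cell: n / total for cell, n in counts.items()} if total else {}


def undersampled_cells(reference, target, bins=10, ratio=0.5):
    """Cells where `target` is less than `ratio` times as dense as `reference`."""
    ref = cell_frequencies(reference, bins)
    tgt = cell_frequencies(target, bins)
    return {cell for cell, freq in ref.items() if tgt.get(cell, 0.0) < ratio * freq}


def share_in_cells(points, cells, bins=10):
    """Fraction of `points` that land in the given set of cells."""
    freqs = cell_frequencies(points, bins)
    return sum(freq for cell, freq in freqs.items() if cell in cells)
```

With OpenImages features as `reference` and MSCOCO features as `target`, `undersampled_cells` marks the low-sampled regions, and `share_in_cells` reports how much of an NAO-style dataset falls inside them.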

Detection Models Are Overly Sensitive to Local Texture but Insensitive to Background Change

When looking further into frequently misclassified NAOs, we found that models tend to focus too much on content within an object’s bounding box and too little on surrounding information. To explore the impact of background data on object classification, we generated new images based on the MSCOCO validation and NAO datasets.

For each object in these datasets, we generated six new images with their background changed to categories including underwater, beach, forest, road, mountain, and sky. When we then used a pretrained MSCOCO model to examine the class probability across the same object with different backgrounds, we found that the change in probability across images was small, regardless of the background used.
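The measurement itself is easy to express in code. In the sketch below, the six background names come from the experiment described above, while `composite` (which pastes an object onto a background) and `class_probability` (which returns the model's probability for the class of interest) are hypothetical callables that you would supply for your own model and image tooling.

```python
BACKGROUNDS = ("underwater", "beach", "forest", "road", "mountain", "sky")


def background_sensitivity(object_crop, composite, class_probability):
    """Score the same object on every background and report the spread.

    composite(object_crop, background_name) -> image   (hypothetical helper)
    class_probability(image) -> float in [0, 1]        (hypothetical helper)
    """
    probabilities = {
        background: class_probability(composite(object_crop, background))
        for background in BACKGROUNDS
    }
    # A small spread means the prediction barely reacts to the background.
    spread = max(probabilities.values()) - min(probabilities.values())
    return probabilities, spread
```

A spread close to zero across all six backgrounds corresponds to the behavior we observed: the class probability changes very little no matter where the object appears.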

While this robustness to background is ideal in many cases, it also means that the detection models don’t take into account potentially useful background information, such as an unlikely combination of a predicted object and its background. For example, if an airplane and a shark have a similar appearance, the model might misclassify the shark as an airplane. By taking into account the underwater background, however, a model could decrease the probability that the object belongs to the airplane class.

Standard detection models also show a strong bias toward local texture. When we performed patch shuffling on NAOs, rearranging sections of the objects so that they were barely recognizable by shape, these objects were often still misclassified.

This result suggests that the detection models were overly sensitive to texture and small subparts of these objects rather than the shape of the objects as a whole. For example, when we took a shark image that was misidentified as an airplane and performed random patch shuffling, the resulting object was still misclassified as an airplane.
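Patch shuffling of the kind described above can be sketched in a few lines. This version is a minimal illustration that works on an image stored as a list of pixel rows; the function name, the `grid` parameter, and the requirement that the image dimensions divide evenly by `grid` are assumptions made to keep the example short.

```python
import random


def shuffle_patches(image, grid=4, seed=None):
    """Cut an image into grid x grid patches and reassemble them in random order.

    image: list of rows, each row a list of pixels.
    Height and width must both be divisible by `grid`.
    """
    height, width = len(image), len(image[0])
    if height % grid or width % grid:
        raise ValueError("image dimensions must be divisible by grid")
    patch_h, patch_w = height // grid, width // grid

    # Cut the image into patches, in row-major order.
    patches = [
        [row[c * patch_w:(c + 1) * patch_w]
         for row in image[r * patch_h:(r + 1) * patch_h]]
        for r in range(grid)
        for c in range(grid)
    ]
    random.Random(seed).shuffle(patches)

    # Stitch the shuffled patches back together, one band of patches at a time.
    shuffled = []
    for r in range(grid):
        band = patches[r * grid:(r + 1) * grid]
        for y in range(patch_h):
            shuffled.append([pixel for patch in band for pixel in patch[y]])
    return shuffled
```

Because every pixel is preserved and only the patch layout changes, local texture survives while global shape is destroyed. If a model still predicts the same class on the shuffled image, that is a sign it is relying on texture rather than shape.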

How to Use the NAO Dataset

Because deep neural networks can be vulnerable to attacks from adversarial objects, the NAO dataset is a helpful resource for examining the robustness of these models. You can also adapt the techniques covered in our paper to build NAO datasets aimed at evaluating other target datasets or models.

By recognizing dataset blind spots and identifying exploitable model weaknesses, researchers and engineers can create deep learning solutions that are more robust when applied to adversarial objects.

Learn more

- To learn more about how you can build your own NAO dataset and evaluate your model’s performance on NAOs, check out the full paper, Natural Adversarial Objects.